I am currently working to patch something in colors.py and I am coming across a lot of older style code and code that duplicates functionality that can be found in cbook.py (particularly the type-checking functions). Is there a standing rule that code that we come across should get updated or adapted to use the functions in cbook.py?

Or is it the other way around and that we should be avoiding cbook.py?

Thanks,

Ben Root

You should be using as much centralized functionality (e.g. cbook) as possible and clean-ups are welcome. Just make sure if you remove a func that may be outward facing (e.g. a duplicate function from colors.py) that you deprecate it with a warning and note it in the log, and that the regression tests are passing.

Thanks for the efforts!

JDH
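
A minimal sketch of the deprecation step described above, assuming a duplicate helper is being retired in favor of a centralized one. Both function names are hypothetical stand-ins defined here for illustration; they are not the actual contents of colors.py or cbook.py.

```python
import warnings


def is_string_like(obj):
    """Stand-in for a centralized type-checking helper (hypothetical)."""
    return isinstance(obj, str)


def looks_like_string(obj):
    """Hypothetical duplicate, kept only so outside callers keep working."""
    warnings.warn(
        "looks_like_string is deprecated; use is_string_like instead",
        DeprecationWarning,
        stacklevel=2,  # report the caller's line, not this wrapper
    )
    # Delegate to the centralized helper so there is a single implementation.
    return is_string_like(obj)
```

With `stacklevel=2` the warning points at the calling code, which is usually what an outside user needs in order to find the call to update.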

···

On Thu, Aug 12, 2010 at 2:34 PM, Benjamin Root <ben.root@...553...> wrote:

> I am currently working to patch something in colors.py and I am coming across a lot of older style code and code that duplicates functionality that can be found in cbook.py (particularly the type-checking functions). Is there a standing rule that code that we come across should get updated or adapted to use the functions in cbook.py?
>
> Or is it the other way around and that we should be avoiding cbook.py?

Ok, good to know.

Btw, the current set of tests has a failure for testing pcolormesh. Wasn’t there a change fairly recently to fix a problem with pcolormesh, so that the test image should now be updated?

Ben Root

···

On Thu, Aug 12, 2010 at 2:39 PM, John Hunter <jdh2358@…149…> wrote:

> You should be using as much centralized functionality (e.g. cbook) as possible and clean-ups are welcome. Just make sure if you remove a func that may be outward facing (e.g. a duplicate function from colors.py) that you deprecate it with a warning and note it in the log, and that the regression tests are passing.
>
> Thanks for the efforts!
>
> JDH

Mike did update the images a couple weeks ago, and when I run the tests, I don't get that failure. Is it possible that you have not done a clean rebuild and install since Mike's changes?

Eric

···

On 08/12/2010 09:56 AM, Benjamin Root wrote:

Ben Root

Visually, I can’t see the difference between the expected and the generated images, but the test system is reporting an RMS of 116.512. I have tried completely clean installs of the trunk version of matplotlib. The same happens for completely clean installs of the maintenance branch as well.

Ben Root

···

On Thu, Aug 12, 2010 at 3:58 PM, Eric Firing <efiring@…552…229…> wrote:

> Mike did update the images a couple weeks ago, and when I run the tests, I don’t get that failure. Is it possible that you have not done a clean rebuild and install since Mike’s changes?
>
> Eric

This may be a limitation of the test system, then. I think that differences in external libraries can cause subtle output differences, triggering a false failure alarm. Maybe the tolerance needs to be increased for this particular test.

Eric
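
To make the tolerance idea concrete, here is a rough sketch of an RMS-based image check. It is not the code in matplotlib's test system; `rms_difference`, `images_match`, and the flat-list pixel representation are assumptions made for illustration.

```python
import math


def rms_difference(expected, actual):
    """Root-mean-square difference between two equal-length pixel sequences."""
    if len(expected) != len(actual):
        raise ValueError("images must have the same number of pixel values")
    if not expected:
        return 0.0
    squared = sum((e - a) ** 2 for e, a in zip(expected, actual))
    return math.sqrt(squared / len(expected))


def images_match(expected, actual, tol):
    """Return True when the RMS difference does not exceed the tolerance."""
    return rms_difference(expected, actual) <= tol
```

With a per-test tolerance, a test whose output varies slightly across library versions can be given a looser `tol` without relaxing every other comparison.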

···

On 08/13/2010 06:20 AM, Benjamin Root wrote:

Ben Root

Or maybe there is a bug in how it is calculating the differences? I am flipping back and forth between the images in eog and I can’t figure out what is different.

Ben Root

···

On Fri, Aug 13, 2010 at 12:11 PM, Eric Firing <efiring@…229…> wrote:

> This may be a limitation of the test system, then. I think that differences in external libraries can cause subtle output differences, triggering a false failure alarm. Maybe the tolerance needs to be increased for this particular test.
>
> Eric

Can you see it in the actual difference png?

If it were a problem in calculating the difference, I would expect it to be consistent among systems and to show up in all image comparison tests.

Eric

···

On 08/13/2010 07:20 AM, Benjamin Root wrote:

Ben Root

Where are the difference images saved to? Or do I have to pass an option to matplotlib.test() to generate those? I only have a directory “result_images” with directories of expected and resulting images.

Ben Root

···

On Fri, Aug 13, 2010 at 12:31 PM, Eric Firing <efiring@…229…> wrote:

> Can you see it in the actual difference png?
>
> If it were a problem in calculating the difference, I would expect it to be consistent among systems and to show up in all image comparison tests.
>
> Eric

FYI, the code is in lib/matplotlib/testing/compare.py, and the diff images on failure are placed in, for example,

./result_images/test_axes/failed-diff-pcolormesh.png

I am not seeing failures on an Ubuntu Linux box I just tested on.

JDH
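
As a convenience, the diff images can be collected with a short script. This is a sketch that assumes the layout implied by the single example path above (`result_images/<test module>/failed-diff-<name>.png`); the function name is hypothetical.

```python
import glob
import os


def find_failed_diffs(root="result_images"):
    """List diff images left behind by failed comparisons under ``root``."""
    # Assumed layout: <root>/<test module>/failed-diff-<name>.png
    pattern = os.path.join(root, "*", "failed-diff-*.png")
    return sorted(glob.glob(pattern))
```

Calling `find_failed_diffs()` from the directory where the tests were run returns an empty list when no comparison failed or when the directory does not exist.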

···

On Fri, Aug 13, 2010 at 12:53 PM, Benjamin Root <ben.root@...553...> wrote:

> Where are the difference images saved to? Or do I have to pass an option to matplotlib.test() to generate those? I only have a directory

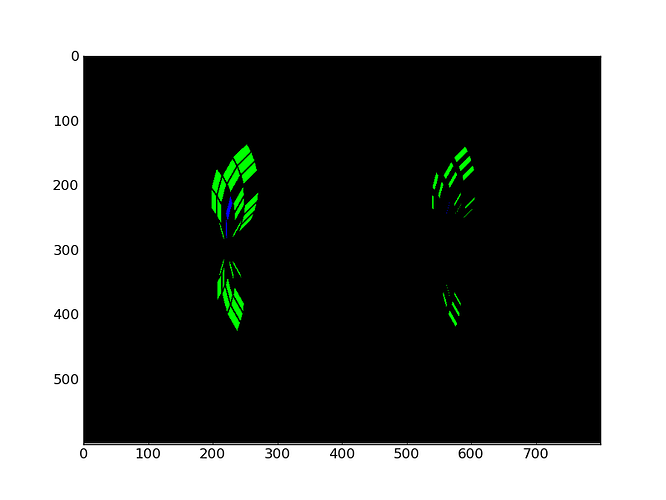

Found it. It is solid black.

Ben Root

···

On Fri, Aug 13, 2010 at 1:18 PM, John Hunter <jdh2358@…149…> wrote:

> FYI, the code is in lib/matplotlib/testing/compare.py, and the diff images on failure are placed in, for example,
>
> ./result_images/test_axes/failed-diff-pcolormesh.png
>
> I am not seeing failures on an Ubuntu Linux box I just tested on.
>
> JDH

Not quite:

In [95]: im = imread('/home/titan/johnh/Downloads/failed-diff-pcolormesh.png').ravel()

In [96]: im.max()

Out[96]: 0.039215688

In [97]: im.min()

Out[97]: 0.0
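
The maximum above is small but nonzero, which is why the diff looks black to the eye. As a quick check, and assuming the diff PNG stores 8-bit channels that `imread` scales to the 0..1 range, the value can be converted back to intensity levels. The helper name is hypothetical.

```python
def to_8bit_levels(value):
    """Convert a 0..1 float intensity to the nearest 8-bit level (0..255)."""
    return int(round(value * 255))


max_diff = 0.039215688  # value reported by im.max() in the session above
levels = to_8bit_levels(max_diff)
print(levels)  # 10
```

A peak of about 10 levels out of 255 is hard to see on screen, so a diff image can appear solid black while still holding nonzero pixels.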

···

On Fri, Aug 13, 2010 at 1:26 PM, Benjamin Root <ben.root@...553...> wrote:

> Found it. It is solid black.

Probably we should increase the threshold as Eric suggested, but I wonder if the different test results we are getting could be due to the PIL version.

In [6]: import Image

In [7]: Image.VERSION

Out[7]: '1.1.7'

JDH
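
For comparing installations, a small version probe can help. This is a sketch only: the session above uses the classic `import Image` layout, while the `PIL` package import and its `__version__` attribute are assumptions about newer installs, so the function falls back quietly when either is missing.

```python
import importlib


def pil_version():
    """Return the installed PIL version string, or None if it cannot be found."""
    # Try the package-style import first, then the classic top-level module.
    for module_name in ("PIL", "Image"):
        try:
            module = importlib.import_module(module_name)
        except ImportError:
            continue
        for attr in ("__version__", "VERSION"):
            version = getattr(module, attr, None)
            if isinstance(version, str):
                return version
    return None


print(pil_version())
```

Posting this value alongside a failing test report makes it easier to tell whether two machines differ in their imaging library.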

···

On Fri, Aug 13, 2010 at 1:34 PM, John Hunter <jdh2358@...149...> wrote:

> Not quite:
>
> In [95]: im = imread('/home/titan/johnh/Downloads/failed-diff-pcolormesh.png').ravel()
>
> In [96]: im.max()
>
> Out[96]: 0.039215688
>
> In [97]: im.min()
>
> Out[97]: 0.0

Ah, good catch.

Well, “enhancing” the difference yields this.

Maybe the colors were off?

Ben

P.S. - Image.VERSION == '1.1.6'

So maybe it is something regarding different PIL versions?
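
One way to "enhance" a nearly black diff is to stretch its values to the full 0..1 range. This is a sketch of that idea on a flat list of pixel values, not necessarily the method used for the enhanced image mentioned above; `stretch_contrast` is a hypothetical helper.

```python
def stretch_contrast(pixels):
    """Linearly rescale pixel values so the smallest maps to 0 and the largest to 1."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        # A constant image has no contrast to stretch.
        return [0.0 for _ in pixels]
    return [(p - lo) / (hi - lo) for p in pixels]


faint = [0.0, 0.02, 0.04]  # made-up values in the range seen in the diff
print(stretch_contrast(faint))
```

After stretching, the largest difference becomes full white, so regions where the two renderings disagree stand out even when the raw diff never exceeds a few percent of full scale.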
